Hello, today in this blog we are going to see how to configure Kafka and integrate it with a Pega application.

If you have Pega Personal Edition installed on your system, Kafka is installed along with it, so whenever you start the Pega instance it starts a Kafka server too.

But today we'll see how to install a separate Kafka server, configure it, and then integrate Pega with the newly configured Kafka server.

First of all, you should have a basic understanding of what Kafka is and why it is used. If not, have a look at this link: https://www.javatpoint.com/apache-kafka.

In my upcoming blogs I will write a Kafka introduction too.

Now let's get started.

STEP 1: DOWNLOAD AND INSTALL KAFKA

1. First, download Kafka from the Apache website: https://kafka.apache.org/downloads. The binary download is a .tgz archive, e.g. kafka_2.13-3.3.1.tgz.

2. After downloading, place the archive in any folder you like; I have kept it in the C Drive.

3. After placing it in the C Drive, extract the archive. After extracting, you may see a folder named kafka with the version number, e.g. kafka_2.13-3.3.1, and if you open it, the same folder is there again, e.g. kafka_2.13-3.3.1\kafka_2.13-3.3.1. Drag the inner folder and place it directly in the C Drive so that you don't end up with a folder inside a folder.

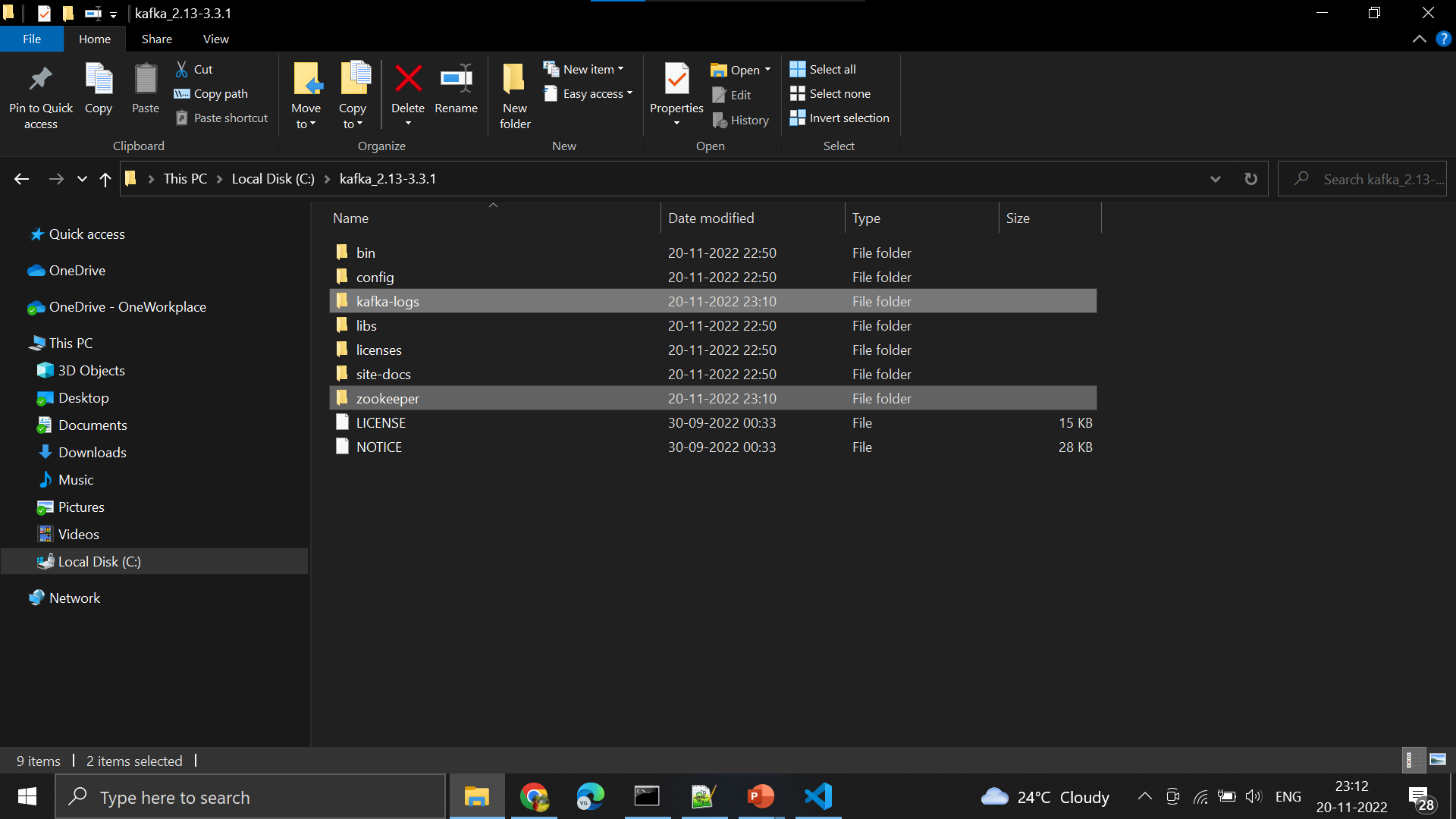

4. After the above steps, open the extracted folder (e.g. kafka_2.13-3.3.1). You should be able to see the Kafka folders like in the below image.

5. Now we have one problem: the Kafka server installed with Pega Personal Edition and the newly installed Kafka server use the same default port numbers (2181 for ZooKeeper and 9092 for Kafka). To avoid a clash, we have to change the Kafka and ZooKeeper port numbers in the newly extracted folder.

6. Note: we should use port numbers that are not already in use on our system. To check this, open the Command Prompt and enter the command > netstat -a (or, for a single port, netstat -ano | findstr :9099).

7. Pick port numbers that are not occupied by any other application on the system.
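If you prefer to check a port from a script instead of reading the whole netstat output, here is a minimal Python sketch (Python is only my choice for these helper scripts; it is not needed for the setup). It tries to bind a TCP socket to the port: if the bind fails, something is already holding it. The helper name port_is_free is hypothetical.

```python
import socket


def port_is_free(port: int, host: str = "") -> bool:
    """Return True if a TCP socket can be bound to (host, port).

    host="" means all interfaces, like a Kafka listener written as PLAINTEXT://:9099.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False  # another process already holds this port
    return True


if __name__ == "__main__":
    # Default ports (2181, 9092) and the replacements chosen in this blog (2182, 9099).
    for port in (2181, 2182, 9092, 9099):
        print(f"port {port}: {'free' if port_is_free(port) else 'IN USE'}")
```

With Pega Personal Edition running, you would expect 2181 and 9092 to show as in use, and 2182 and 9099 to be free.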

8. Now open the folder in the C Drive that was extracted earlier [ kafka_2.13-3.3.1 ], create two folders named kafka-logs and zookeeper, and copy their folder paths, like:

C:\kafka_2.13-3.3.1\kafka-logs , C:\kafka_2.13-3.3.1\zookeeper.

9. We will use these two folders to store the ZooKeeper data and the Kafka topic data (log files) instead of the default /tmp locations.

9.1. Now open the config folder and then open the zookeeper.properties file.

10. In that file, change the data directory as shown below: dataDir=/tmp/zookeeper

-> dataDir=C\:\\kafka_2.13-3.3.1\\zookeeper

11. Also change the port number from clientPort=2181 -> clientPort=2182, because the Pega Personal Edition ZooKeeper already uses port 2181. After the modification, save the zookeeper.properties file and close it.

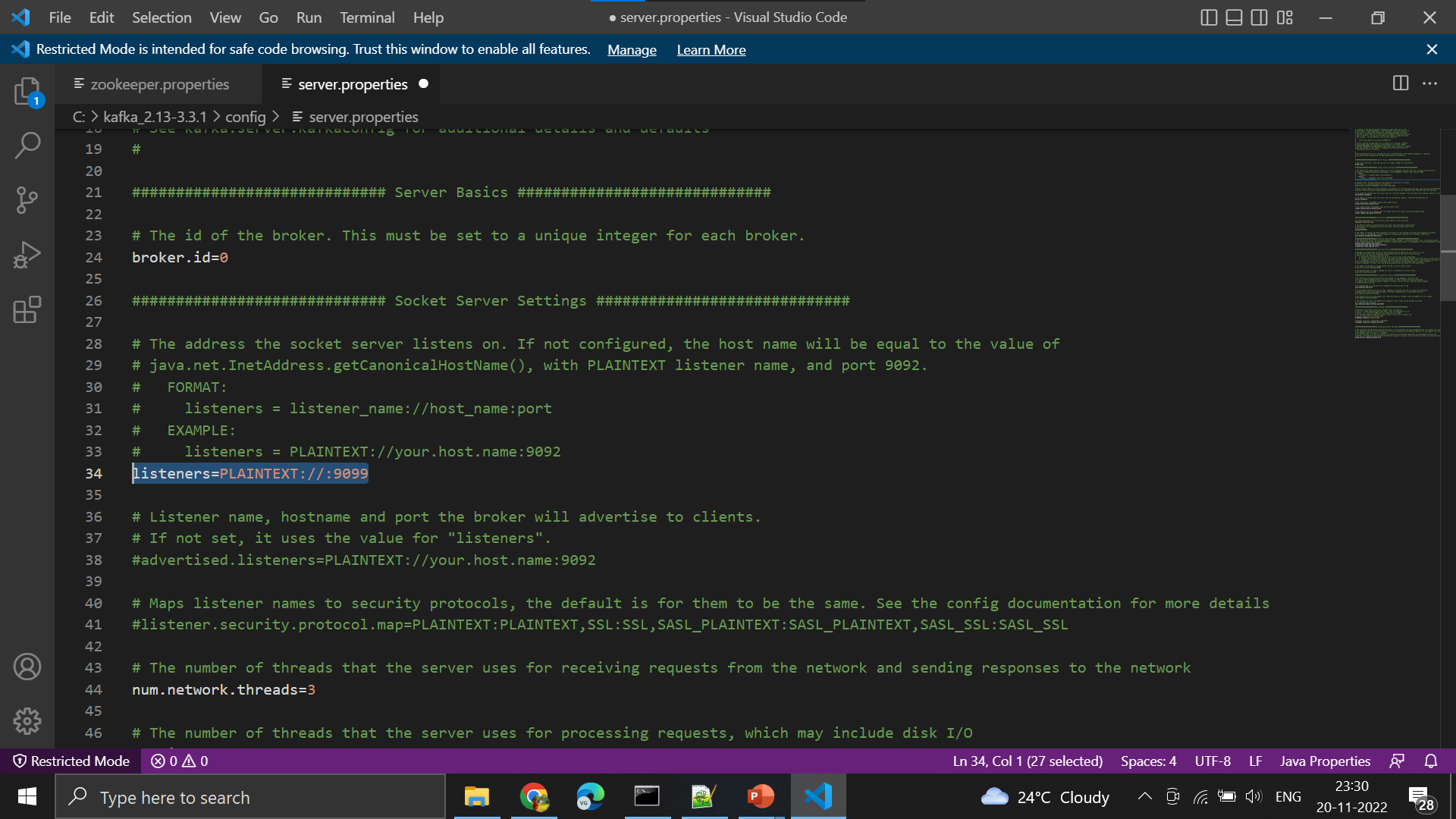
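The two zookeeper.properties changes can also be scripted. Below is a minimal Python sketch, assuming Kafka was extracted to C:\kafka_2.13-3.3.1 as in this blog; escape_properties_value and set_properties are hypothetical helper names. The escaping reproduces how the path is written in step 10 (C\:\\kafka_2.13-3.3.1\\zookeeper): each backslash is doubled and the colon is escaped. It only rewrites active key=value lines and appends missing keys, so it is not a full .properties editor.

```python
from pathlib import Path

KAFKA_HOME = r"C:\kafka_2.13-3.3.1"  # assumption: extraction folder from step 3


def escape_properties_value(value: str) -> str:
    # C:\kafka -> C\:\\kafka : double each backslash first, then escape the colon.
    return value.replace("\\", "\\\\").replace(":", "\\:")


def set_properties(text: str, updates: dict[str, str]) -> str:
    """Set key=value for each entry in `updates`.

    Active (uncommented) lines for a key are rewritten, keys that are not found
    are appended at the end, and comment lines are left untouched.
    """
    lines = text.splitlines()
    found = set()
    for i, line in enumerate(lines):
        if line.lstrip().startswith(("#", "!")):
            continue  # skip comment lines
        key, sep, _ = line.partition("=")
        key = key.strip()
        if sep and key in updates:
            lines[i] = f"{key}={updates[key]}"
            found.add(key)
    lines.extend(f"{k}={v}" for k, v in updates.items() if k not in found)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    cfg = Path(KAFKA_HOME) / "config" / "zookeeper.properties"
    if cfg.exists():  # only does something on the machine where Kafka was extracted
        cfg.write_text(set_properties(cfg.read_text(), {
            "dataDir": escape_properties_value(KAFKA_HOME + r"\zookeeper"),
            "clientPort": "2182",  # 2181 is already used by Pega Personal Edition
        }))
        print(f"updated {cfg}")
```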

12. Now open the config folder again, and this time open the server.properties file.

13. It will look like the above image. Now change the listener port: #listeners=PLAINTEXT://:9092

-> listeners=PLAINTEXT://:9099. We have uncommented the line and changed the Kafka server port from 9092 to 9099, because the Pega Personal Edition Kafka server already uses port 9092.

14. Change the logs directory from log.dirs=/tmp/kafka-logs -> log.dirs=C\:\\kafka_2.13-3.3.1\\kafka-logs so that the topic data is stored in that folder.

15. Finally, change the ZooKeeper connection port from zookeeper.connect=localhost:2181 ->

zookeeper.connect=localhost:2182 so that Kafka connects to our new ZooKeeper port.

16. Now save the server.properties file and close it.
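Since the port numbers now have to agree across two files, a small sanity check can catch typos before starting anything. This Python sketch reads both files and reports mismatches: zookeeper.connect must point at the ZooKeeper clientPort, listeners must use 9099, and neither data folder should still be under /tmp. It assumes the same C:\kafka_2.13-3.3.1 folder; parse_properties and check_config are hypothetical helpers, and the parser is deliberately minimal rather than a full implementation of the Java .properties format.

```python
import re
from pathlib import Path

KAFKA_HOME = r"C:\kafka_2.13-3.3.1"  # assumption: extraction folder from step 3


def parse_properties(text: str) -> dict[str, str]:
    r"""Minimal reader for the key=value lines used in these two files.

    Comment lines ('#', '!') and blank lines are skipped, and simple escapes such
    as \: and \\ are undone. Line continuations and unicode escapes are not handled.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = re.sub(r"\\(.)", r"\1", value.strip())
    return props


def check_config(zk: dict[str, str], server: dict[str, str], kafka_port: str = "9099") -> list[str]:
    """Return human-readable problems; an empty list means the files agree."""
    problems = []
    # zookeeper.connect is "host:port" (possibly a comma list); compare the first port.
    first = server.get("zookeeper.connect", "").split(",")[0].split("/")[0]
    if first.rpartition(":")[2] != zk.get("clientPort"):
        problems.append("zookeeper.connect port does not match zookeeper clientPort")
    # listeners is a comma list such as "PLAINTEXT://:9099"; a commented line counts as missing.
    ports = [entry.rpartition(":")[2] for entry in server.get("listeners", "").split(",") if entry]
    if kafka_port not in ports:
        problems.append(f"no listener on port {kafka_port}")
    for name, value in (("dataDir", zk.get("dataDir", "")), ("log.dirs", server.get("log.dirs", ""))):
        if value.startswith("/tmp"):
            problems.append(f"{name} still points to {value}")
    return problems


if __name__ == "__main__":
    config_dir = Path(KAFKA_HOME) / "config"
    zk_file, server_file = config_dir / "zookeeper.properties", config_dir / "server.properties"
    if zk_file.exists() and server_file.exists():
        issues = check_config(parse_properties(zk_file.read_text()),
                              parse_properties(server_file.read_text()))
        print("\n".join(issues) or "ports and folders look consistent")
```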

STEP 2: START THE KAFKA ENVIRONMENT

1. Now open the kafka_2.13-3.3.1 folder in C Drive.

2. Then click the address bar to select it (or press Alt+D). Type “cmd” into the address bar and hit Enter to open the Command Prompt with the path of the current folder already set.

3. After opening cmd from the current folder, start the ZooKeeper service by typing the below command and pressing Enter.

.\bin\windows\zookeeper-server-start.bat .\config\zookeeper.properties

4. Once the ZooKeeper service has started, it looks like the above image. Keep that window open. Now open a new cmd from the address bar of the C:\kafka_2.13-3.3.1 folder, type the below command and press Enter so that the Kafka broker service starts.

.\bin\windows\kafka-server-start.bat .\config\server.properties

5. Once the Kafka service has started, it looks like the above image.
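Instead of watching the two windows, you can also wait until both services accept TCP connections. Below is a minimal Python sketch; wait_for_port is a hypothetical helper. A successful connection only proves that something is listening on the port, not that the broker is fully healthy, so treat it as a quick check.

```python
import socket
import time


def wait_for_port(port: int, host: str = "localhost",
                  timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll until a TCP connection to (host, port) succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means some process is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, call wait_for_port(2182) after starting ZooKeeper and wait_for_port(9099) after starting the Kafka broker.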

STEP 3: CREATE A TOPIC TO STORE YOUR EVENTS

1. Now we should create a topic, which will be used for publishing and consuming messages.

2. Now it's time to open a new cmd from the address bar of the C:\kafka_2.13-3.3.1 folder, type the below command and press Enter so that the topic gets created.

Here, in the below command, I have given the topic name: topic_demo

.\bin\windows\kafka-topics.bat --create --topic topic_demo --bootstrap-server localhost:9099

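3. We can see a small warning about the topic name, and then the topic gets created in Kafka. The warning appears because the name contains an underscore: Kafka warns that topic names containing a period ('.') or an underscore ('_') could collide in its metric names, so it is best to use one or the other, but not both. It is safe to ignore here.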
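If you want to check a topic name before creating it, this Python sketch applies the naming rules as I understand them from Kafka's source (only ASCII letters, digits, '.', '_' and '-'; at most 249 characters; not '.' or '..') and flags names that would collide once '.' and '_' are treated as the same character, which is what the warning above is about. The helper names are hypothetical, and Kafka itself remains the authority when you run kafka-topics.bat.

```python
import re

# Rules as I understand them from Kafka's topic-name validation.
MAX_TOPIC_NAME_LENGTH = 249
LEGAL_CHARS = re.compile(r"[a-zA-Z0-9._-]+")


def topic_name_errors(name: str) -> list[str]:
    """Return reasons why `name` would be rejected as a topic name (empty list = valid)."""
    if not name:
        return ["name is empty"]
    errors = []
    if name in (".", ".."):
        errors.append("name cannot be '.' or '..'")
    if len(name) > MAX_TOPIC_NAME_LENGTH:
        errors.append(f"name is longer than {MAX_TOPIC_NAME_LENGTH} characters")
    if not LEGAL_CHARS.fullmatch(name):
        errors.append("only ASCII letters, digits, '.', '_' and '-' are allowed")
    return errors


def unify_collision_chars(topic: str) -> str:
    # The collision check treats '.' and '_' as the same character.
    return topic.replace(".", "_")


def collides(a: str, b: str) -> bool:
    """True if two different topic names would clash in metric names."""
    return a != b and unify_collision_chars(a) == unify_collision_chars(b)


if __name__ == "__main__":
    for name in ("topic_demo", "Pega_Topic", "topic.demo", "bad name!"):
        print(name, topic_name_errors(name) or "ok",
              "| collides with topic.demo:", collides(name, "topic.demo"))
```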

STEP 4: WRITE SOME EVENTS INTO THE TOPIC

1. We have already created a topic [ topic_demo ], so we will publish some messages from a producer to test the server.

2. Now open another cmd from the address bar of the C:\kafka_2.13-3.3.1 folder, type the below command and press Enter so that the producer starts for publishing messages/events.

.\bin\windows\kafka-console-producer.bat --topic topic_demo --bootstrap-server localhost:9099

3. The producer has started and is ready to write events/messages into the topic topic_demo. Before typing any messages, we should start a consumer as well.
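The console producer sends one message per line it reads from standard input, so it can also be driven from a script, for example to send a batch of test messages. Below is a minimal Python sketch, assuming the same C:\kafka_2.13-3.3.1 folder and port 9099; producer_command and publish are hypothetical helpers, and only the flags already used in the command above are passed.

```python
import subprocess
from pathlib import PureWindowsPath

KAFKA_HOME = r"C:\kafka_2.13-3.3.1"  # assumption: the folder used throughout this blog


def producer_command(topic: str, bootstrap: str = "localhost:9099",
                     kafka_home: str = KAFKA_HOME) -> list[str]:
    """Build the same kafka-console-producer.bat call as in the step above."""
    script = PureWindowsPath(kafka_home, "bin", "windows", "kafka-console-producer.bat")
    return [str(script), "--topic", topic, "--bootstrap-server", bootstrap]


def publish(messages: list[str], topic: str, bootstrap: str = "localhost:9099") -> None:
    """Send each string as one message via stdin; the producer exits when stdin closes.

    Messages must not contain newlines, since every line becomes a separate message.
    """
    subprocess.run(producer_command(topic, bootstrap),
                   input="".join(m + "\n" for m in messages),
                   text=True, check=True)
```

On the Windows machine, with the broker running, publish(["hello", "from python"], "topic_demo") would send two messages to topic_demo.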

STEP 5: READ THE EVENTS

1. We have created the topic topic_demo and a producer for writing messages/events; now we need a consumer for reading them.

2. Now open one more cmd from the address bar of the C:\kafka_2.13-3.3.1 folder, type the below command and press Enter so that the consumer starts reading messages/events.

.\bin\windows\kafka-console-consumer.bat --topic topic_demo --from-beginning --bootstrap-server localhost:9099

3. Once the consumer has started, it looks like the above image.
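For automated checks, the console consumer can also be run for a bounded time from a script. This Python sketch assumes the same folder and port; besides the flags used above, it can pass --max-messages (exit after N messages) and --timeout-ms (exit if nothing arrives for that long), which are options of kafka-console-consumer. consumer_command and read_messages are hypothetical helpers.

```python
import subprocess
from pathlib import PureWindowsPath
from typing import Optional

KAFKA_HOME = r"C:\kafka_2.13-3.3.1"  # assumption: the folder used throughout this blog


def consumer_command(topic: str, bootstrap: str = "localhost:9099",
                     from_beginning: bool = True,
                     max_messages: Optional[int] = None,
                     timeout_ms: Optional[int] = None,
                     kafka_home: str = KAFKA_HOME) -> list[str]:
    """Build a kafka-console-consumer.bat call like the one in the step above."""
    script = PureWindowsPath(kafka_home, "bin", "windows", "kafka-console-consumer.bat")
    cmd = [str(script), "--topic", topic, "--bootstrap-server", bootstrap]
    if from_beginning:
        cmd.append("--from-beginning")
    if max_messages is not None:
        cmd += ["--max-messages", str(max_messages)]  # exit after this many messages
    if timeout_ms is not None:
        cmd += ["--timeout-ms", str(timeout_ms)]  # exit if nothing arrives for this long
    return cmd


def read_messages(topic: str, count: int, timeout_ms: int = 10_000) -> list[str]:
    """Return up to `count` message values printed by the console consumer."""
    result = subprocess.run(consumer_command(topic, max_messages=count, timeout_ms=timeout_ms),
                            capture_output=True, text=True)
    # Message values are printed to stdout, one per line.
    return result.stdout.splitlines()
```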

STEP 6: TEST READING & WRITING EVENTS BETWEEN PRODUCER AND CONSUMER

1. Now you will have two command prompts, like the image below: [Producer , Consumer]

2. Now type anything in the producer command prompt and press Enter, and you should be able to see the message in the other consumer command prompt.

3. We have successfully configured the Kafka server and its services. Now it's time to configure it from the Pega Platform application.

----***----

STEP 1: CREATE A KAFKA RULE

1. First, open Designer Studio in Pega and create a Kafka rule from the SysAdmin category.

2. I have given "Kafka Connection" as the ID; you can give any name you want.

3. After that, click the Add host button in the Kafka rule form and enter the details Host: localhost and Port: 9099, since that is our Kafka server port. Then save the Kafka rule.

4. After providing the host and port details and saving the rule, scroll down and click the Test connectivity button at the bottom of the Kafka rule form to check whether the connection with the Kafka server is established successfully.

STEP 2: CREATE A DATA SET RULE

1. We have to create a data set rule for Kafka. This rule will be used for creating a new topic, publishing messages and consuming messages.

2. Create a data set rule from the Data Model category.

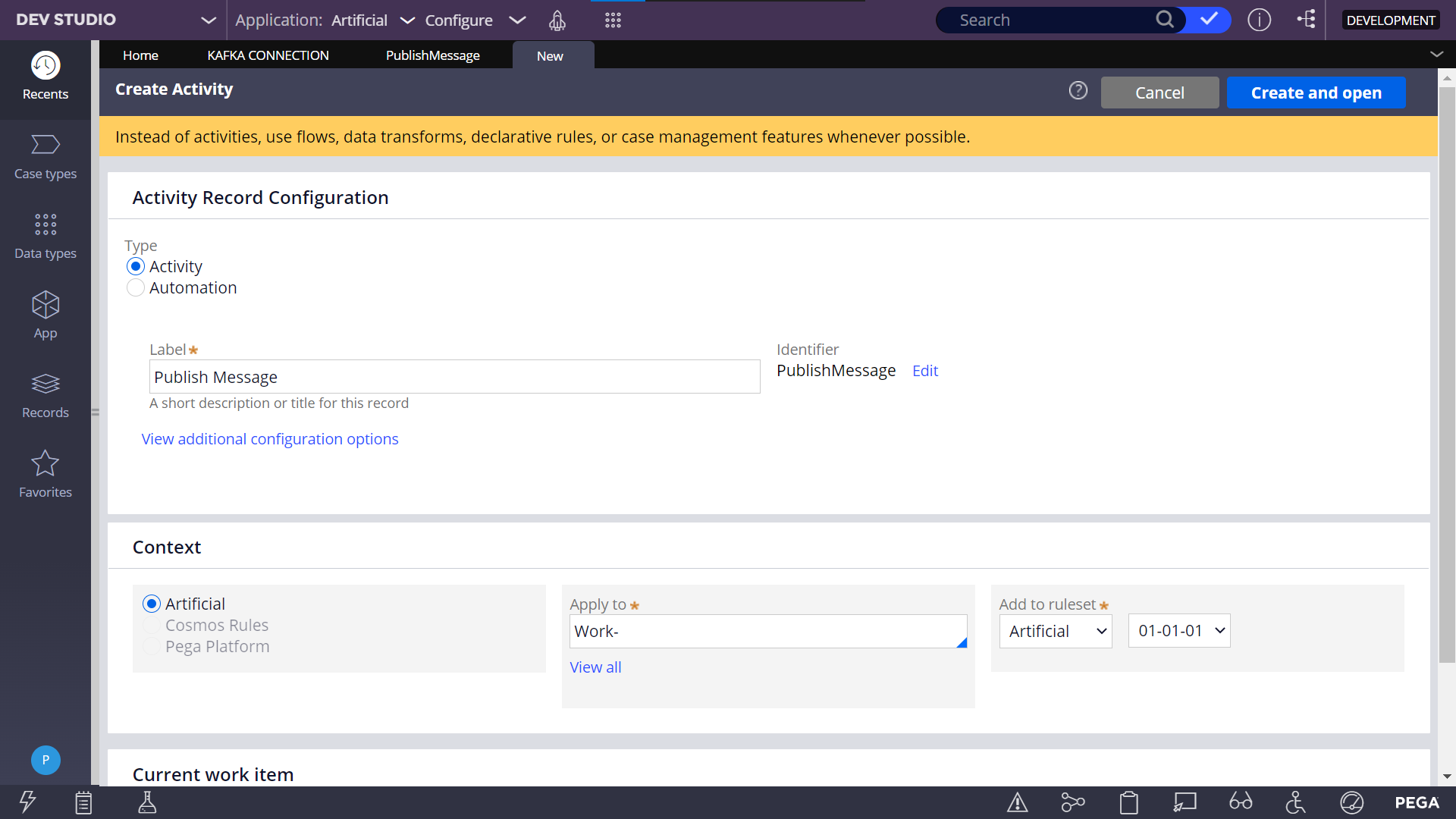

3. In the above image, I have given the label "Publish Message" for the data set rule, selected Kafka as the type in the drop-down and given the Work- class; you can give any class and label you want. Then click the Create and open button after filling in the create rule form.

4. Now, in the Kafka configuration instance field, select the Kafka Connection rule created earlier and then click Test connectivity.

5. The connection was successful, and now we have to specify the topic. There are three options:

Create new: If you want to create a new topic in the Kafka server cluster, choose this option and specify the name in the field below.

Select from list: If you want to use an existing topic from the Kafka cluster, choose this option and select the topic from the drop-down field below.

Use application settings with topic values: Choose this if you know the topic names and want to supply them through application settings, so that they can differ by production level.

6. First, we will create a new topic and publish some messages to it in the Kafka cluster.

I have chosen the topic name Pega_Topic. You also need to add a partition key by clicking the Add key button. The key value of each record decides which partition of the topic the record is stored in, so records with the same key always go to the same partition.

And I have given .pyID as the partition key.

7. Now, at the bottom of the data set rule form [ Message values, Message keys ], select JSON as the data format, like in the below image. Then save the rule.
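To see what the partition key does, here is a Python sketch of how Kafka's Java producer picks a partition for a keyed record with its default partitioner: a murmur2 hash of the key bytes, made positive, modulo the number of partitions. It is a port of Kafka's Utils.murmur2 for illustration, not Pega code. It assumes Pega sends the .pyID value as the record key; how Pega serializes that key into bytes is not covered in this blog, so the partition you compute for a real pyID may differ. murmur2 and partition_for_key are hypothetical helper names.

```python
def murmur2(data: bytes) -> int:
    """Port of Kafka's Utils.murmur2; returns the 32-bit hash as an unsigned int."""
    m = 0x5BD1E995
    mask = 0xFFFFFFFF
    length = len(data)
    h = (0x9747B28C ^ length) & mask  # fixed seed used by Kafka
    # Mix the input four bytes (little-endian) at a time.
    for i in range(0, length - length % 4, 4):
        k = int.from_bytes(data[i:i + 4], "little")
        k = (k * m) & mask
        k ^= k >> 24
        k = (k * m) & mask
        h = ((h * m) & mask) ^ k
    # Mix the remaining 1-3 bytes (mirrors the Java switch fall-through).
    tail = data[length - length % 4:]
    if len(tail) == 3:
        h ^= tail[2] << 16
    if len(tail) >= 2:
        h ^= tail[1] << 8
    if len(tail) >= 1:
        h ^= tail[0]
        h = (h * m) & mask
    # Final avalanche.
    h ^= h >> 13
    h = (h * m) & mask
    h ^= h >> 15
    return h


def partition_for_key(key: str, num_partitions: int) -> int:
    """Positive murmur2 hash of the UTF-8 key bytes modulo the partition count."""
    return (murmur2(key.encode("utf-8")) & 0x7FFFFFFF) % num_partitions
```

The design consequence is what matters here: two records with the same pyID always land in the same partition, which keeps them in order relative to each other, while different keys are spread across partitions. On a topic with a single partition, every record goes to partition 0 regardless of the key.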

STEP 3: CREATE AN ACTIVITY FOR PUBLISHING MESSAGES/EVENTS

1. Now create an activity to publish events/messages through the data set rule created earlier. Create the activity from the Technical category.

2. I have given the label "Publish Message" and the Work- class. After that, click Create and open.

3. First of all, go to the Pages & Classes tab and define KafkaPage with the class Work-.

4. In the activity, Step-1 creates the new page KafkaPage,

Step-2 sets some property values on KafkaPage (such as pyID and pyCustomer),

Step-3 uses the DataSet-Execute method to call the data set rule [PublishMessage] with the Operation set to Save,

Step-4 removes KafkaPage from the clipboard at the end.

Then save the activity.

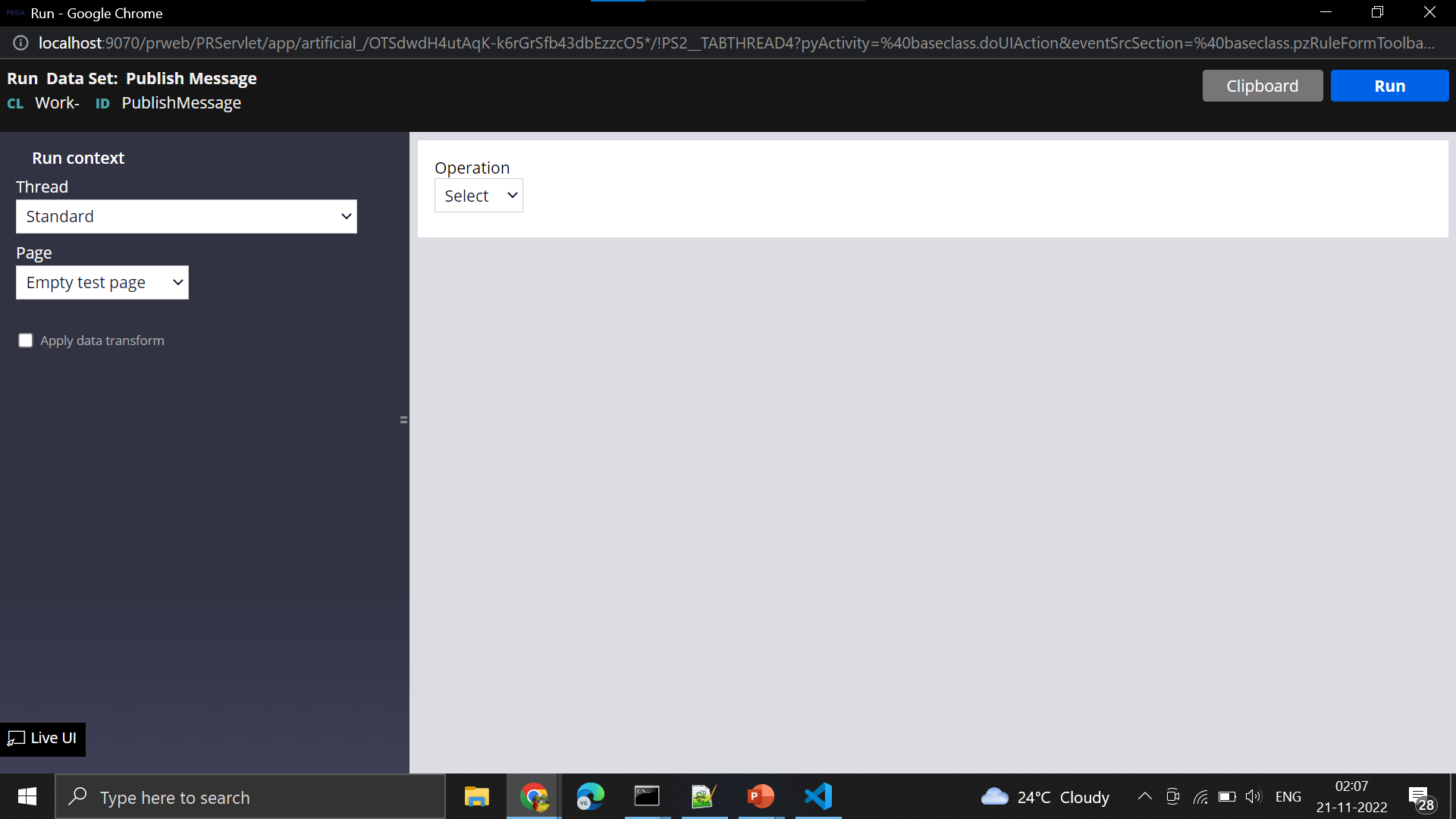

5. Now run the activity from Actions > Run. You should get a success prompt window.

6. Now let's test it by consuming the messages of this topic from a consumer command prompt. Open the C:\kafka_2.13-3.3.1 folder, click the address bar to select it (or press Alt+D), type “cmd” into the address bar and hit Enter to open the Command Prompt with the path of the current folder already set.

7. Now enter the below command in the command prompt and press Enter to see the results.

.\bin\windows\kafka-console-consumer.bat --topic Pega_Topic --from-beginning --bootstrap-server localhost:9099

8. In the consumer command prompt, you can see the message that was published from Pega earlier.

Now test it again by publishing another message from the Pega activity, after changing the pyID and pyCustomer property values in Step-2 of the activity.

9. After running the activity, we can see that the consumer cmd has received another message. Good, it is working as expected.
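Once the consumer prints Pega's messages, you may want to pick fields out of them. This is a minimal Python sketch that assumes each message value is a flat JSON object containing properties such as pyID and pyCustomer; the exact JSON layout Pega writes is not shown in this blog, so check it against your own consumer output. parse_messages is a hypothetical helper. Lines that are not JSON objects are kept aside instead of raising an error.

```python
import json
from typing import Any


def parse_messages(lines: list[str]) -> tuple[list[dict[str, Any]], list[str]]:
    """Split console-consumer output into JSON objects and lines that are not JSON objects."""
    records: list[dict[str, Any]] = []
    skipped: list[str] = []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # ignore blank lines
        try:
            value = json.loads(line)
        except json.JSONDecodeError:
            skipped.append(line)
            continue
        if isinstance(value, dict):
            records.append(value)
        else:
            skipped.append(line)  # valid JSON, but not an object (e.g. a bare number)
    return records, skipped
```

For example, you could save the consumer output to a file, pass its lines to parse_messages and then list [r.get("pyID") for r in records].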

STEP 4: FETCH RECORDS OF THE TOPIC IN PEGA DATA SET

1. Now let's see how to fetch the messages/events data from the topic.

2. Open the Publish Message data set rule that we created earlier and click Actions > Run.

Select Browse as the operation, then click the Run button in the header.

3. We have successfully fetched the data. You should be able to see results like below in the Data Set Preview.

This is the data that we published to Pega_Topic earlier.
